Artificial Intelligence: Time to Move the Cursor

The underlying goal of artificial intelligence (AI) is to simulate human intelligence. This is a modest objective, restrained by our limited capacity for deduction and by an incomplete definition of intelligence. In simple terms, artificial intelligence corresponds to a bounded frontier that covers only the knowledge available, and presumed to persist, at a given point in time.

As computational power increases and technologies advance at exponential rates, why should we limit ourselves to simulating the intelligence that already exists? If we can set aside our fears of robots taking over the world, isn't our real goal to surpass the cognitive limits of our species by creating the intelligence needed to achieve a better, more stable future and lifestyle, free from today's health, economic, environmental and geopolitical flaws?

Artificial Intelligence Is Hindered by the Limits of Human Intelligence

Given all the intricacies of our hyperconnected world, no human, regardless of his or her intelligence, years of experience or academic achievements, can fully understand the set of actions required to create this better path forward. Our world has grown too dynamically complex for any individual or statistical model to assess accurately. Instead, business and government leaders rely on an army of subject matter experts who may be at the top of their fields but possess only a portion of the necessary knowledge and foresight. Each domain analyzes a situation using its own methods, technologies and biases.

Actions are taken based on a conglomerate of constrained and often contradictory viewpoints, limited by imagination and history. Surprises are inevitable because we are missing the critical information that would reveal how one change, no matter how seemingly insignificant, can produce a ripple effect of unintended consequences. It is worth noting that most of the dramatic surprises of recent decades trace back to domain knowledge that was partial, sometimes wrong or, in a number of cases, undermined by instability in the analysis. This is far from a perfect approach.

For example, in examining the financial crisis of 2007-2008, we found that dynamic complexity, which formed from multiple dependencies among the housing market, equity and capital markets, corporate health and banking solvency, in turn affected the money supply and ultimately caused massive unemployment and a severe global recession. The economic risk posed by these interdependencies could not be reliably predicted using human intelligence or statistical analysis, because the system dynamics introduced by subprime loans and mortgage-backed securities were not well understood and foreclosure rates had never exceeded 3%. No simulation of human intelligence, no matter how advanced, would have been able to solve this problem.

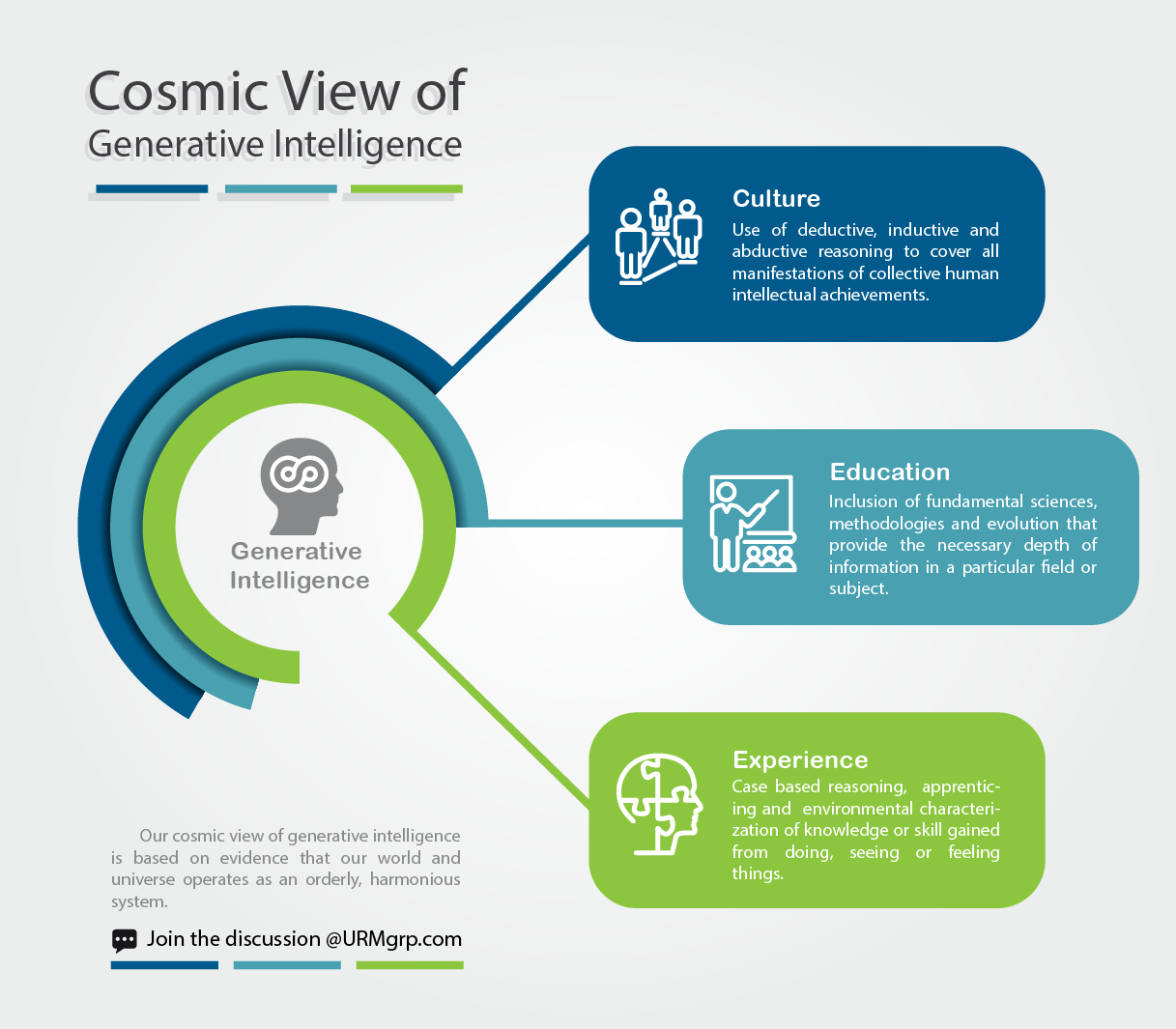

Generative Intelligence Expands the Aim to Cover Unknowns

To thrive in a world that is constantly morphing due to the acceleration of innovation and the velocity of disruption, we must move the AI cursor to generate a wider domain of intelligence. Capturing and automating known patterns of behavior will always be important, but we must expand beyond the limits of statistical models and human intelligence, using mathematical emulation and advanced algorithms to better understand the behavior of complex systems and to discover the circumstances that can cause a breakdown or unwanted outcomes.

By making the unknowns known, we can achieve a wider and more profound objective that releases intelligence from the confines of historical patterns. As AI moves from hype to reality, generative intelligence will be the next frontier. Generative intelligence pairs the perception and decision-making capabilities of artificial intelligence with the scientific disciplines of dynamic complexity and perturbation theory to create a systemic and iterative collection of knowledge, which will become the right synthesis for human progress.

Generative intelligence will provide the rational and unbiased mechanisms to advance multi-stakeholder systems thinking in ways that harness the opportunities of the Fourth Industrial Revolution. If we want to build a better world, where accountability is the safety net for a sound economy, viable systems and greater human prosperity, we must expand the domain of intelligence to cover both the knowns and the unknowns, and then ready ourselves for action.

Supporting the Move to a Wider Domain of Intelligence

The cultural shift required to handle risk more completely and effectively can start at the local level with individual system owners, but ultimately a top-down approach will be required to bring systems as wide as the economy under better control through the prediction, diagnosis, remediation and surveillance of risks related to dynamic complexity.

Only a mix of accumulated experience, cultural evolution and proper education can fulfil such a foundational objective. We must work together to ensure that new risk management methods and practices addressing the unique demands of our modern era are implemented. And we must demand accountability from any business, government, regulatory body or other organization that does not take the path toward this progress.

For those who recognize the urgency of finding a solution, we invite you to join the pursuit of generative intelligence and learn from your colleagues who have committed themselves to this path at https://urmforum.org/urm-forum/.