Posts

Taking a Computer-Aided Engineering Approach to Business Transformation

/in Business Transformation /by Nabil Abu el Ata

Changing competitive landscapes, technological advances and consumer trends are rapidly disrupting businesses and creating an imperative to execute transformation programs that improve operational efficiency, optimize workforces and offer better customer experiences. Across industries, vast amounts of resources are being spent on business transformation programs, but so far the results have been dismal. According to IDC, companies worldwide spent $1.7 trillion on their digital transformation efforts in 2017, yet analysis suggests that only 1% of transformation programs will actually achieve or exceed their expectations.

Business transformations are pursued to fundamentally change systems, processes, people and technology across an entire business or subdivision in order to achieve measurable improvements in efficiency, effectiveness and stakeholder satisfaction. When transformation programs fail, time and money are wasted and no significant improvements are gained. Meanwhile, the risks that spurred the transformation in the first place, such as product or service obsolescence, rising costs or declining revenues, have grown.

Intelligent Business Transformation

Taking a computer-aided engineering (CAE) approach to business transformation can help businesses manage program risks, maximize value, decrease costs and accelerate innovation. CAE is widely used across industries to build the right product at the best performance-to-cost ratio. A main goal is to improve designs and to anticipate and resolve potential problems as early as possible.

Using emulation-based digital twin capabilities, we help businesses achieve the same benefits of CAE in their transformation programs:

- Make business transformation decisions based on key metrics including performance, time to market and cost

- Manage risk and communicate the long-term business implications of any decisions to all stakeholders

- Use computer emulation rather than real-world testing to save money and time, while supporting a broader scope of up-front validation

- Gain risk and performance insights earlier in the transformation process, when changes are less expensive to make

- Explore innovative solutions that may not have been considered plausible without the aid of computer emulation

Reliable Predictive & Prescriptive Intelligence

When businesses want to build best-in-class systems or ensure the optimal performance of existing business ecosystems, computer emulation is an effective way to validate plans, predictively expose risks, and identify which corrective actions will achieve the desired results in any given situation. Algorithmically emulating the digital twin of a business structure supports advanced predictive analysis to determine the limits of dynamically complex systems, identify potential risks and recommend the corrective actions needed to meet business objectives.

While CAE typically uses simulation to replicate system behaviors, simulation is not sufficient to capture a change in dynamics. Because only known behaviors are represented, and therefore reproduced, some risks will not be exposed until the event actually occurs. We use emulation in place of simulation to mathematically reproduce risks that may occur under certain conditions, even if there is no historical record of these events happening.

Quickly & Economically Explore All Options

Once the emulation has been created, transformation teams can quickly and economically explore any number of change scenarios that would otherwise be complex, expensive or even impossible to test on a real system. Users simply change variables, such as volume, architecture and infrastructure, or run sensitivity analyses on changing process dynamics, and observe the predicted outcome.
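To make the "change a variable, observe the predicted outcome" workflow concrete, here is a minimal Python sketch. It uses a single M/M/1 queue as a stand-in for the emulated system. That is a textbook queueing formula, not Accretive's emulation technology, and the function names and scenario values are hypothetical. Varying volume plays the role of business growth, and varying service time plays the role of an infrastructure change.

```python
def predicted_response_time(arrival_rate: float, service_time: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - rho), rho = lambda * S.

    Stand-in emulator (assumption). Returns infinity once utilization reaches 1,
    because the queue then grows without bound.
    """
    utilization = arrival_rate * service_time
    if utilization >= 1.0:
        return float("inf")
    return service_time / (1.0 - utilization)


def sweep(volumes, service_times):
    """Predicted response time for every (volume, service_time) scenario."""
    return {
        (volume, service): predicted_response_time(volume, service)
        for volume in volumes
        for service in service_times
    }


if __name__ == "__main__":
    # Hypothetical scenarios: volume in requests/s, service time in seconds
    # per request (the faster infrastructure option has the smaller value).
    scenarios = sweep(volumes=[10, 40, 80, 95], service_times=[0.010, 0.008])
    for (volume, service), response in sorted(scenarios.items()):
        print(f"volume={volume:>3}/s  service={service * 1000:.0f} ms  "
              f"response={response * 1000:.1f} ms")
```

In this model, response time grows non-linearly as volume approaches capacity. A single-queue model cannot capture the interdependencies that the text attributes to emulation, so treat it only as an illustration of the scenario loop.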

Validated through robust testing in hundreds of applications and various industries, our approach provides visibility across complex business and IT ecosystems so that decision makers can quickly agree upon the most strategic plans and proactively take corporate actions with confidence in the outcome. Additionally, these methods support transformation programs by enabling users to test optimization and rationalization scenarios to confirm the best-fit solution before committing funds or resources to any project.

Manage the Transformation Project Outcome

During the execution phase, the digital twin provides the foresight needed to ensure an optimal outcome that weighs both short- and long-term cost benefits in alignment with performance and scalability goals. This lets the business minimize risks and consistently gain all the intended benefits of the transformation program. Ultimately, these capabilities help businesses define and make the right moves at the right time to continuously achieve better economy, control risk and support critical renewal.

Learn more about Business Transformation

Read the Intelligent Business Transformation solution paper to learn why and how businesses use a Computer-Aided Engineering (CAE) capacity to validate transformation plans and control risks.

Time to Reinvent How We Manage Risk | Business Vision Magazine

/in News Articles /by Valerie Driessen

Risk Management in the Age of Digital Disruption

/in Dynamic Complexity /by Nabil Abu el Ata

Today, as the repercussions of the Fourth Industrial Revolution begin to take hold, many corporations are fighting hidden risks engendered by obsolete cost structures, outdated business platforms and practices, market evolution, client perception and pricing pressures that demand some form of action. But change is often a difficult choice because it implies a certain degree of unknown risk.

Current risk management practices, which deal mostly with the risk of reoccurring historical events, cannot help business, government or economic leaders deal with the uncertainty and rate of change driven by the Fourth Industrial Revolution. As new innovations threaten to disrupt, business leaders lack the means to measure the risks and rewards associated with the adoption of new technologies and business models. Established companies are faltering as leaner and more agile start-ups bring to market the new products and services that customers of the on-demand or sharing economy desire—with better quality, faster speeds and/or lower costs than established companies can match.

Due to the continuous adaptations driven by the Third Industrial Revolution, most organizations are now burdened by inefficient and unpredictable systems. Even as the inherent risks of current systems are recognized, many businesses are unable to confidently identify a successful path forward.

PwC’s 2015 Risk in Review survey of 1,229 senior executives and board members reports that 73% of respondents agreed that risks to their companies are increasing. However, the survey shows that companies are largely not responding to these increasing threats with improved risk management programs. Executives are eager to confront business risks, boost management effectiveness, prevent costly misjudgments, drive efficiency and generate higher profit margin growth. Yet only an elite group of companies (12% of those surveyed) have put in place the processes and structures that qualify them as true risk management leaders under PwC’s criteria.

The shortcomings of traditional risk management technologies and probabilistic methodologies are largely to blame. According to Deloitte’s 2015 Global Risk Management Survey, 62% of respondents said that risk information systems and technology infrastructure were extremely or very challenging, and 35% considered identifying and managing new and emerging risks to be extremely or very challenging.

To remain competitive, companies must pursue two parallel strategies:

- Building agile and flexible risk management frameworks that can anticipate and prepare for the shifts that bring long-term success.

- Building the resiliency that will enable those frameworks to mitigate risk events and keep the business moving toward its goals.

While many risk management and business management experts agree on the need for better risk management methods and technologies, their proposed use of probabilistic methods of risk measurement and reliance on big data cannot fulfill the risk management requirements of the twenty-first century.

Many popular analytic methods are supported by nothing more than big data hype, which promises users that the more data they have, the better the conclusions they will be able to draw. However, if the dynamics of a system are continuously changing, any analysis based on big data will only be valid for the window of time during which the data was captured. Outside this window, alignment with reality is unlikely.

To respond to the rate of change engendered by the Fourth Industrial Revolution, the practice of risk management must mature into a scientific discipline. Our client work and research expose the failures of traditional risk management practices, yet many are not aware that there is a better way to discover and treat risk. Ultimately, we will need more collaborators and partners who wish to teach business leaders and risk practitioners how to use new universal risk management approaches and mathematics-based emulation technologies. These tools can identify new and emerging risks and prescriptively control business outcomes. If you are interested in joining our cause, please contact us; we are always looking for new research, technology and service partners.

How Dynamic Complexity Disrupts Business Operations

/in Dynamic Complexity /by Nabil Abu el Ata

Dynamic complexity always produces a negative effect in the form of loss, time elongation or shortage, causing inefficiencies and side effects similar to friction, collision or drag. Dynamic complexity cannot be observed directly; only its effects can be measured. Additionally, dynamic complexity is impossible to predict from historical data, no matter the amount, because the number of possible states tends to be too large for any given set of samples. Therefore, trend analysis alone cannot sufficiently represent all possible and yet-to-be-discovered system dynamics.

In its early stages, dynamic complexity is like a hidden cancer. Finding a single cancer cell in the human body is like looking for a specific grain of sand in a sandbox. As with cancer, the disease is often discovered only once unexplained symptoms appear. To proactively treat dynamic complexity before it negatively impacts operations, we need diagnostics that can reliably reveal its future impact. System modeling and mathematical emulation allow us to provoke and expose dynamic complexity through two hidden system properties. The first is the degree of interdependency among system components. The second is the multiple perturbations exerted by internal and external influences on both the components and the edges that connect them, directly or indirectly.

Successful risk determination and mitigation depends on how well we understand and account for dynamic complexity, its evolution, and the amount of time before the system hits one or more singularities as stress intensifies on the dependencies and intertwined structures that form the system.

Knowing which conditions will cause singularities allows us to understand how far the system can be stressed before it no longer meets business objectives, and to proactively put risk management practices in place to avoid these unwanted situations.

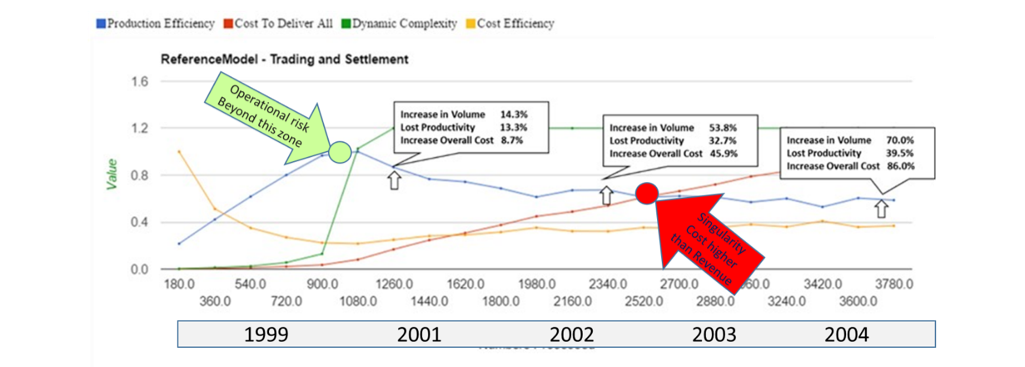

Below is an example of a client case in which dynamic complexity played a key role in resource consumption, time to deliver and volume delivered. The scenario shown in the graph represents a trading and settlement implementation handling a continuously increasing business volume; the curves show how the system reacts.

In the graph above, production efficiency increases until it hits a plateau, after which the business is increasingly impacted by slowing productivity and rising costs. The amount of loss is proportional to the increase in dynamic complexity, which gradually absorbs resources (i.e., cost) while delivering little. The singularity occurs where the two curves (productivity/revenue and cost) meet, which in turn translates into lost margin, cost overruns and overall instability.
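A toy model can reproduce the shape of the graph. The sketch below is illustrative only. The saturating revenue curve, the quadratic overhead term standing in for dynamic complexity, and all constants are assumptions, not the client's data. The code scans integer volumes to find two points: the plateau (peak margin) and the singularity, which is the first volume at which cost catches up with revenue.

```python
import math

# Illustrative curve shapes only (assumptions, not client data):
# revenue saturates as volume grows (the productivity plateau), while cost
# grows linearly plus a quadratic term standing in for the overhead that
# dynamic complexity adds as volume rises.
PLATEAU = 1000.0    # hypothetical maximum deliverable revenue
UNIT_COST = 0.2     # hypothetical linear cost per unit of volume
OVERHEAD = 0.0004   # hypothetical coefficient of complexity-driven overhead


def revenue(volume: float) -> float:
    return PLATEAU * (1.0 - math.exp(-volume / PLATEAU))


def cost(volume: float) -> float:
    return UNIT_COST * volume + OVERHEAD * volume ** 2


def margin(volume: float) -> float:
    return revenue(volume) - cost(volume)


def peak_margin_volume(max_volume: int) -> int:
    """Integer volume with the largest margin, i.e. the top of the plateau."""
    return max(range(1, max_volume + 1), key=margin)


def singularity_volume(max_volume: int):
    """First volume at which cost catches up with revenue after a profitable
    region (where the two curves meet); None if not reached by max_volume."""
    was_profitable = False
    for volume in range(1, max_volume + 1):
        if margin(volume) > 0:
            was_profitable = True
        elif was_profitable:
            return volume
    return None


if __name__ == "__main__":
    print("plateau at volume", peak_margin_volume(2000))
    print("singularity at volume", singularity_volume(2000))
```

With these made-up constants, the margin peaks near a volume of 500 and the curves meet a little above 1,000. Other curve shapes would move both points.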

In client cases such as this one, we have successfully used predictive emulation to isolate the evolving impact of dynamic complexity and to calculate risk as an impact on system performance, cost, scalability and dependability. This allows us to measure changes in system health when they are provoked by a change in dynamic complexity’s behavior under different operational process dynamics, and to identify the component(s) that cause the problem.

But knowing the how and what isn’t sufficient. We also need to know when, so we measure dynamic complexity itself, which then allows us to monitor its evolution and apply the right mitigation strategy at the right time.

Optimal Business Control

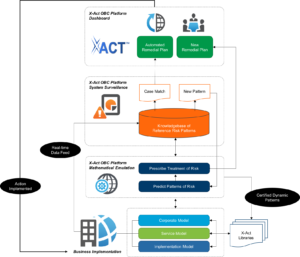

/in Key Concepts /by Nabil Abu el Ata

Optimal business control (OBC) is a set of management, data collection, analytics, machine learning and automation processes. Through these processes, management predicts, evaluates and, when necessary, responds to mitigate the dynamic-complexity-related risks that hinder the realization of business goals.

OBC is enabled by the X-Act OBC Platform to support the goals of universal risk management (URM) through the predictive analysis and prescriptive treatment of business risks. Using the quantitative and qualitative metrics supported by X-Act OBC Platform, users can proactively discover risks that may cause situations of system deterioration. Using this knowledge, systems can then be placed under surveillance to enable right-time risk alerts and preemptive fixing of any identified problems.
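One way to picture right-time alerting is to compare monitored volume with two things: a singularity point predicted by emulation, and the lead time a fix requires. The sketch below is hypothetical. `assess`, `RiskAlert` and the growth-based projection are illustrative assumptions, not the X-Act OBC Platform's API. The singularity volume is taken as an input from emulation rather than fitted from historical trends.

```python
from dataclasses import dataclass


@dataclass
class RiskAlert:
    headroom: float                # share of the predicted limit not yet used
    periods_to_singularity: float  # projected time left at the forecast growth
    alert: bool                    # True when time left <= lead time for a fix


def assess(current_volume: float, singularity_volume: float,
           growth_per_period: float, lead_time_periods: float) -> RiskAlert:
    """Compare monitored volume with an emulation-predicted singularity.

    singularity_volume is an input (assumed to come from emulation);
    growth_per_period is the business's own volume forecast.
    """
    remaining = singularity_volume - current_volume
    headroom = remaining / singularity_volume
    if remaining <= 0:
        periods = 0.0           # already at or past the singularity
    elif growth_per_period <= 0:
        periods = float("inf")  # no growth forecast: limit not approached
    else:
        periods = remaining / growth_per_period
    return RiskAlert(headroom, periods, periods <= lead_time_periods)


if __name__ == "__main__":
    # Hypothetical figures: 800 units/month today, singularity predicted at
    # 1000, volume growing 50 units/month, and a fix that takes 6 months.
    print(assess(800, 1000, 50, 6))
```

Here the alert fires at 80% of the predicted limit, not at a fixed utilization threshold. The trigger is that the projected 4 months of headroom is shorter than the 6 months needed to remediate.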

Through the use of a knowledge library and machine-learning sciences, X-Act OBC Platform enables users to define the optimal treatment of risk and use this knowledge to feed a decision engine that organically evolves to cover new and increasingly complex behavioral scenarios.

X-Act OBC Platform uses situational data revealed through causal analysis and stress testing to provide surveillance of systems and identify cases of increasing risk. These cases are unknown to big data analytical methods, which are limited to predictions based on data collected through experience. Within the OBC database, each diagnosis definition is stored together with its remediation plan. The stored plans cover both the experience-based knowns and the cases that were previously unknown. This allows a potential risk to be identified rapidly, with immediate analysis of its root causes and proposed remedial actions.

This approach to right-time risk surveillance represents a real breakthrough that alleviates many of the pains created by the traditional long cycle of risk management. That cycle starts with problem analysis and diagnosis and ends with an eventual fix well beyond the point of optimal action. OBC offers a clear advantage by shortening the time between the discovery and the remediation of undesirable risks.

As the database is enriched with the dynamic characteristics that evolve over a system’s lifetime, the knowledge it contains becomes more advanced. OBC is also adaptive. As foundational or circumstantial system changes are continuously recorded in the OBC database, the predictive platform identifies any new risk, determines the diagnosis, defines the remedial actions and finally enhances the OBC database with this new knowledge.
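The store, look up and learn cycle described above can be sketched as a small knowledge base keyed by symptom signatures. Everything here is hypothetical: the class names, the signature format, and the `diagnose` callback that stands in for predictive emulation. It is not the OBC database schema. It only illustrates how known cases return an immediate remedy while new cases are diagnosed once and then stored.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, List, Optional, Tuple

# Hypothetical signature format: the set of observed risk symptoms.
Signature = FrozenSet[str]


@dataclass
class Remedy:
    diagnosis: str
    actions: List[str]


@dataclass
class KnowledgeBase:
    """Hypothetical store pairing each diagnosis with its remediation plan."""
    entries: Dict[Signature, Remedy] = field(default_factory=dict)

    def lookup(self, signature: Signature) -> Optional[Remedy]:
        return self.entries.get(signature)

    def learn(self, signature: Signature, remedy: Remedy) -> None:
        self.entries[signature] = remedy


def handle(kb: KnowledgeBase, signature: Signature,
           diagnose: Callable[[Signature], Remedy]) -> Tuple[Remedy, bool]:
    """Return (remedy, was_known). Known patterns get the stored remedy at once;
    new ones go through the slow diagnosis path and are stored for next time."""
    known = kb.lookup(signature)
    if known is not None:
        return known, True
    remedy = diagnose(signature)
    kb.learn(signature, remedy)
    return remedy, False


if __name__ == "__main__":
    kb = KnowledgeBase()
    symptoms = frozenset({"latency_rising", "queue_depth_rising"})
    slow_path = lambda sig: Remedy("contention on a shared resource",
                                   ["rebalance load", "add capacity"])
    print(handle(kb, symptoms, slow_path))  # diagnosed, then stored
    print(handle(kb, symptoms, slow_path))  # served from the knowledge base
```

Using a frozenset makes the signature independent of the order in which symptoms were observed. A real system would need fuzzier matching than exact set equality.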

Companies with the most mature OBC practices and robust knowledge bases will be able to confidently define and make the right moves at the right time to achieve better economy, control risks and ultimately create and maintain a competitive advantage.

New Book from Dr. Abu el Ata Offers A New Framework to Predict, Remediate and Monitor Risk

/in Press Releases /by Valerie Driessen

“The Tyranny of Uncertainty” is now available for purchase on Amazon.com

Omaha, NE—May 18, 2016–Accretive Technologies, Inc. (Accretive) announces the release of a new book, “The Tyranny of Uncertainty.” Accretive Founder and CEO, Dr. Nabil Abu el Ata, jointly authored the book with Rudolf Schmandt, Head of EMEA and Retail production for Deutsche Bank and Postbank Systems board member, to expose how dynamic complexity creates business risks and present a practical solution.

The Tyranny of Uncertainty explains why traditional risk management methods can no longer prepare stakeholders to act at the right time to avoid or contain risks such as the Fukushima Daiichi Nuclear Disaster, the 2007-2008 Financial Crisis, or Obamacare’s Website Launch Failure. By applying scientific discoveries and mathematically advanced methods of predictive analytics, the book demonstrates how business and information technology decision makers have used the presented methods to reveal previously unknown risks and take action to optimally manage risk.

Further, the book explains the widening impact of dynamic complexity on business, government, healthcare, environmental and economic systems. It forewarns readers that we will enter an era of chronic crisis if appropriate steps are not taken to modernize risk management practices. The risk management problems and solutions presented draw on three sources: Dr. Abu el Ata’s and Mr. Schmandt’s decades of practical experience, their scientific research, and the positive results achieved by hundreds of global organizations during early-stage adoption of the presented innovations.

The book is available to order on amazon.com at https://www.amazon.com/Tyranny-Uncertainty-Framework-Predict-Remediate/dp/3662491036/ref=sr_1_1.

The methodologies and innovations presented in this book by Dr. Abu el Ata and Mr. Schmandt are now in various stages of adoption at over 350 businesses worldwide, and the results have been very positive. Businesses use the proposed innovations and methodologies to evaluate new business models, identify the root cause of risk, re-architect systems to meet business objectives, identify opportunities for millions of dollars of cost savings and much more.

About Accretive

Accretive Technologies, Inc. offers highly accurate predictive and prescriptive business analytic capabilities to help organizations thrive in the face of increasing pressures to innovate, contain costs and grow. By leveraging the power of Accretive’s smart analytics platform and advisory services, global leaders in financial, telecommunications, retail, entertainment, services and government markets gain the foresight they need to make smart transformation decisions and maximize the performance of organizations, processes and infrastructure. Founded in 2003 with headquarters in New York, NY and offices in Omaha, NE and Paris, France, Accretive is a privately owned company with over 350 customers worldwide. For more information, please visit http://www.acrtek.com.

Perturbation Theory

/in Key Concepts /by Nabil Abu el Ata

Perturbation theory provides a mathematical method for finding an approximate solution to a problem, by starting from the exact solution of a related problem. A critical feature of the technique is a middle step that breaks the problem into “solvable” and “perturbation” parts. Perturbation theory is applicable if the problem at hand cannot be solved exactly, but can be formulated by adding a “small” term to the mathematical description of the exactly solvable problem.
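A standard textbook example, not drawn from the author's work, shows the solvable/perturbation split. The equation x**2 + eps*x - 1 = 0 has the exactly solvable part x**2 - 1 = 0 and the small perturbation term eps*x. Expanding the root as a power series in eps and matching coefficients gives x ≈ 1 - eps/2 + eps**2/8. The sketch compares that approximation with the exact root from the quadratic formula.

```python
import math


def exact_root(eps: float) -> float:
    """Positive root of x**2 + eps*x - 1 = 0 from the quadratic formula."""
    return (-eps + math.sqrt(eps * eps + 4.0)) / 2.0


def perturbative_root(eps: float, order: int = 2) -> float:
    """Approximate the same root with a power series in eps, up to `order`.

    Solvable part: x**2 - 1 = 0, giving x0 = 1. Perturbation: eps*x.
    Substituting x = x0 + eps*x1 + eps**2*x2 and matching powers of eps:
      O(1):      x0**2 = 1                -> x0 = 1
      O(eps):    2*x0*x1 + x0 = 0         -> x1 = -1/2
      O(eps**2): x1**2 + 2*x0*x2 + x1 = 0 -> x2 = 1/8
    """
    if not 0 <= order <= 2:
        raise ValueError("only orders 0, 1 and 2 are derived here")
    coefficients = [1.0, -0.5, 0.125]
    return sum(c * eps ** k for k, c in enumerate(coefficients[: order + 1]))


if __name__ == "__main__":
    for eps in (0.1, 0.5):
        print(eps, exact_root(eps), [perturbative_root(eps, k) for k in range(3)])
```

For eps = 0.1, the second-order approximation is already within about 1e-6 of the exact root. For larger eps the neglected terms grow, which is why the perturbation must be "small".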

Background

Perturbation theory supports a variety of applications, including Poincaré’s chaos theory, and is a strong platform for dealing with dynamic behavior problems. However, the success of this method depends on our ability to preserve the analytical representation and solution as far as we can afford to, conceptually and computationally. As an example, I successfully applied these methods in 1978 to create a full analytical solution for the three-body lunar problem[1].

In 1687, Isaac Newton’s work on lunar theory attempted to explain the motion of the moon under the gravitational influence of the earth and the sun (known as the three-body problem), but Newton could not account for variations in the moon’s orbit. In the mid-1700s, Lagrange and Laplace advanced the view that the constants describing the motion of a planet around the sun are perturbed by the motion of other planets and vary as a function of time. This led to further discoveries by Charles-Eugène Delaunay (1816-1872) and Henri Poincaré (1854-1912). More recently, I used predictive computation of direct and indirect planetary perturbations on lunar motion to achieve greater accuracy and a much wider representation. This body of work on perturbations has paved the way for space exploration and further scientific advances, including quantum mechanics.

How Perturbation Theory is Used to Solve a Dynamic Complexity Problem

The three-body problem of Sun, Moon and Earth is an eloquent expression of dynamic complexity, whereby the motion of each body is perturbed by the motion of the others and varies as a function of time. While we have not solved all the mysteries of our universe, we can predict the movement of a celestial body with great accuracy using perturbation theory.

During my doctoral studies, I found that while Newton’s law holds in a simple laboratory setting, its usefulness decreases as complexity increases. When predicting the trajectory (and coordinates at a point in time) of the three heavenly bodies, the solution must account for the fact that gravity attracts the bodies to each other depending on their mass, distance and direction. Their trajectories therefore undergo constant minute changes in velocity and direction, which must be taken into account at every step of the calculation. I found that the problem was solvable using common celestial mechanics if you start with only two celestial bodies, e.g., the earth and the moon.

But of course this solution is not correct, because the sun was omitted from the equation. So the incorrect solution is then perturbed by adding the influence of the sun. Note that it is the result that is modified, not the problem, because there is no general closed-form formula for solving a problem with three bodies. Now we are closer to reality but still far from precise, because the position and velocity of the sun that we used were not its actual values. The sun’s actual position is calculated using the same common celestial mechanics, applied this time to the sun and the earth and then perturbed by the influence of the moon. This continues until an accurate representation of the system is achieved.
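The iterate-and-perturb procedure can be illustrated without celestial mechanics. The toy system below was chosen for clarity and is not the lunar equations: two coupled quantities satisfy x = a + eps*y and y = b + eps*x. The first pass ignores the coupling, just as the two-body step ignores the sun. Each later pass corrects each quantity using the other's latest estimate, much as the sun's position is recomputed and the moon's solution is perturbed again. A closed form is included only to check convergence.

```python
def successive_perturbation(a: float, b: float, eps: float, iterations: int):
    """Toy analogue of the iterate-and-perturb procedure.

    Two coupled quantities obey x = a + eps*y and y = b + eps*x.
    Step 0 ignores the coupling (the unperturbed, solvable problem); each later
    step corrects both using the previous estimates, so for |eps| < 1 the error
    shrinks by a factor of |eps| per step.
    """
    x, y = a, b  # unperturbed solution: coupling omitted
    history = [(x, y)]
    for _ in range(iterations):
        # The right-hand side is evaluated with the previous x and y.
        x, y = a + eps * y, b + eps * x
        history.append((x, y))
    return history


def exact_solution(a: float, b: float, eps: float):
    """Closed form of the coupled toy system, used only for comparison."""
    denom = 1.0 - eps * eps
    return (a + eps * b) / denom, (b + eps * a) / denom


if __name__ == "__main__":
    target = exact_solution(1.0, 2.0, 0.1)
    for step, (x, y) in enumerate(successive_perturbation(1.0, 2.0, 0.1, 5)):
        print(step, x, y, max(abs(x - target[0]), abs(y - target[1])))
```

The three-body problem has no closed form to check against, which is why the real procedure stops when corrections fall below the required precision rather than by comparison with an exact answer.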

Applicability to Risk Management

The notion that the future rests on more than just a whim of the gods is a revolutionary idea. A mere 350 years separate today’s risk-assessment and hedging techniques from decisions guided by superstition, blind faith, and instinct. During this time, we have made significant gains. We now augment our risk perception with empirical data and probabilistic methods to identify repeating patterns and expose potential risks, but we are still missing a critical piece of the puzzle. Inconsistencies still exist and we can only predict risk with limited success. In essence, we have matured risk management practices to the level achieved by Newton, but we cannot yet account for the variances between the predicted and actual outcomes of our risk management exercises.

This is because most modern systems are dynamically complex—meaning system components are subject to the interactions, interdependencies, feedback, locks, conflicts, contentions, prioritizations, and enforcements of other components both internal and external to the system in the same way planets are perturbed by other planets. But capturing these influences either conceptually or in a spreadsheet is impossible, so current risk management practices pretend that systems are static and operate in a closed-loop environment. As a result, our risk management capabilities are limited to known risks within unchanging systems. And so, we remain heavily reliant on perception and intuition for the assessment and remediation of risk.

I experienced this problem firsthand as Chief Technology Officer of First Data Corporation, when I found that business and technology systems do not always exhibit predictable behaviors. Despite the company’s wealth of experience, mature risk management practices and deep domain expertise, we would sometimes be caught off guard by an unexpected risk or a sudden decrease in system performance. So I began to wonder whether the hidden effects that made the prediction of satellite orbits difficult also created challenges in the predictable management of a business. Through my research and experience, I found that the mathematical solution provided by perturbation theory was universally applicable to any dynamically complex system, including business and IT systems.

Applying Perturbation Theory to Solve Risk Management Problems

Without the ability to identify and assess the weight of dynamic complexity as a contributing factor to risk, uncertainty remains inherent in current risk management and prediction methods. When applied to prediction, probability and experience will always lead to uncertainty and prevent decision makers from achieving the optimal trade-off between risk and reward. We can escape this predicament by using the advanced methods of perturbation mathematics I discovered. Computer processing power has now advanced sufficiently to support perturbation-based emulation that efficiently and effectively exposes dynamic complexity and predicts its future impacts.

Emulation is used in many industries to reproduce the behavior of systems and explore unknowns. Take for instance space exploration. We cannot successfully construct and send satellites, space stations, or rovers into unexplored regions of space based merely on historical data. While the known data from past endeavors is certainly important, we must construct the data which is unknown by emulating the spacecraft and conducting sensitivity analysis. This allows us to predict the unpredicted and prepare for the unknown. While the unexpected may still happen, using emulation we will be better prepared to spot new patterns earlier and respond more appropriately to these new scenarios.

Using Perturbation Theory to Predict and Determine the Risk of Singularity

Perturbation theory seems to be the best-fit solution for providing accurate formulation of dynamic complexity that is representative of the web of dependencies and inequalities. Additionally, perturbation theory allows for predictions that correspond to variations in initial conditions and influences of intensity patterns. In a variety of scientific areas, we have successfully applied perturbation theory to make accurate predictions.

After numerous applications of perturbation-theory-based mathematics, we can affirm its problem-solving power. Philosophically, there is a strong affinity between dynamic complexity and its discovery through perturbation-based solutions. Originally, we used perturbation theory to solve gravitational interactions. Then we used it to reveal the interdependencies in mechanics and dynamic systems that produce dynamic complexity. We feel strongly that perturbation theory is the right foundational solution for the dynamic complexity that arises across a large spectrum of dynamics: gravitational, mechanical, nuclear, chemical, etc. All of them represent a dynamic complexity dilemma. All of them can be solved accurately only if all, or the majority, of the individually significant inequalities are explicitly represented in the solution.

An inequality is the dynamic expression of the interdependency between two components. Such a dependency can be direct: an explicit connection, which is always first order. It can also be indirect: a connection through a third component, which may be of any order, on the basis that whatever is perturbed in turn perturbs others. As we can see, solutions based on Newton’s work were only approximations of reality, because Newton’s principles considered only direct pairwise interdependencies as the fundamental forces.
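Powers of a coupling matrix can represent direct and indirect dependencies of increasing order. This sketch is an illustrative assumption, not the author's formulation. In it, `direct[i][j]` is a hypothetical strength with which component i perturbs component j. The k-th power collects influence carried along chains of exactly k links. So a component with no direct edge to another can still exert second- or higher-order influence, which is the kind of coupling a view limited to direct pairs omits.

```python
from typing import List

Matrix = List[List[float]]


def mat_mult(a: Matrix, b: Matrix) -> Matrix:
    """Plain square-matrix product (no external libraries)."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def influence_by_order(direct: Matrix, max_order: int) -> List[Matrix]:
    """Element [k - 1] is direct**k, the k-th order influence.

    Entry (i, j) of direct**k sums the products of coupling strengths along
    every chain (walk) of exactly k links from i to j, i.e. indirect influence
    routed through k - 1 intermediate components. Cycles are counted too.
    """
    if max_order < 1:
        raise ValueError("max_order must be at least 1")
    orders = [direct]
    for _ in range(max_order - 1):
        orders.append(mat_mult(orders[-1], direct))
    return orders


def total_influence(direct: Matrix, max_order: int) -> Matrix:
    """Sum of direct and indirect influence up to max_order."""
    n = len(direct)
    total = [[0.0] * n for _ in range(n)]
    for m in influence_by_order(direct, max_order):
        for i in range(n):
            for j in range(n):
                total[i][j] += m[i][j]
    return total


if __name__ == "__main__":
    # Hypothetical chain: A perturbs B (0.5), B perturbs C (0.4); no A-C edge.
    W = [[0.0, 0.5, 0.0],
         [0.0, 0.0, 0.4],
         [0.0, 0.0, 0.0]]
    print(total_influence(W, 3))  # A still influences C at second order
```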

We have successfully applied perturbation theory across a diverse range of cases, from economic, healthcare and corporate management modeling to industry transformation and information technology optimization. In each case, we were able to determine with sufficient accuracy the singularity point beyond which dynamic complexity would become predominant and the system’s behavior would become chaotic and unpredictable.

Our approach computes the three metrics of dynamic complexity and determines the component, link or pattern that will cause a singularity. It also allows users to build scenarios to fix, optimize or push the singularity point further out. Our ambition is to demonstrate clearly that perturbation theory can be used for two purposes. It can accurately represent system dynamics and predict their limits or singularities. It can also reverse engineer a situation to provide prescriptive support for risk avoidance.
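The text does not define its three dynamic complexity metrics, so the sketch below swaps in a different, well-known technique: bottleneck analysis from operational queueing analysis, based on the utilization law. Given hypothetical per-transaction service demands, the resource with the largest demand saturates first and sets the throughput ceiling. A what-if upgrade of that resource shows how the ceiling moves, which is a simple analogue of pushing out the singularity point, and where the next bottleneck appears. Component names and numbers are assumptions.

```python
from typing import Dict, Tuple

# Seconds of work each component performs per transaction (hypothetical).
Demands = Dict[str, float]


def utilization(demands: Demands, throughput: float) -> Dict[str, float]:
    """Utilization law: U_i = X * D_i for throughput X (transactions/s)."""
    return {name: throughput * d for name, d in demands.items()}


def bottleneck(demands: Demands) -> Tuple[str, float]:
    """Component that saturates first and the throughput at which it does."""
    name = max(demands, key=demands.get)
    return name, 1.0 / demands[name]


def with_upgrade(demands: Demands, name: str, speedup: float) -> Demands:
    """Scenario: make one component `speedup` times faster (copy, no mutation)."""
    upgraded = dict(demands)
    upgraded[name] = demands[name] / speedup
    return upgraded


if __name__ == "__main__":
    current = {"app_server": 0.004, "database": 0.008, "message_bus": 0.005}
    print("today:", bottleneck(current))
    scenario = with_upgrade(current, "database", 2.0)
    print("after database upgrade:", bottleneck(scenario))
```

Doubling the database speed raises the ceiling from 125 to 200 transactions per second, but only because the message bus becomes the new limit. This simple model ignores the indirect interactions that the text argues emulation must capture.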

More details on how we apply perturbation theory to solve risk management problems and associated case studies are provided in my book, The Tyranny of Uncertainty.

[1] Abu el Ata, Nabil. Analytical Solution of the Planetary Perturbation on the Moon. Doctor of Mathematical Sciences, Sorbonne Publication, France, 1978.

Understanding Dynamic Complexity

/in Key Concepts /by Nabil Abu el Ata

Complexity is a subject that everyone intuitively understands. If you add more components, more requirements or more of anything, a system apparently becomes more complex. In the digital age, as globalization and rapid technology advances create an ever-changing world at a faster and faster pace, it would be hard not to see the impacts of complexity, but dynamic complexity is less obvious. It lies hidden until the symptoms reveal themselves, their cause remaining undiscovered until their root is diagnosed. Unfortunately, diagnosis often comes too late for proper remediation. We have observed in the current business climate that the window of opportunity to discover and react to dynamic complexity and thereby avoid negative business impacts is shrinking.

Dynamic Complexity Defined

Dynamic complexity is a detrimental property of any complex system in which the behaviorally determinant influences between its constituents change over time. The change can be due to either internal events or external influences. Influences generally occur when a set of constituents (1…n) is stressed enough to exert an effect on a dependent constituent, e.g., a non-trivial force against a mechanical part, or a delay or limit at some stage in a process. Dynamic complexity creates effects that were previously considered unexpected and that are impossible to predict from historical data, no matter the amount, because the number of states tends to be too large for any given set of samples.
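A back-of-the-envelope calculation shows why historical samples cannot cover the state space. The sketch assumes, purely for illustration, 20 components that can each independently be in one of 5 states, observed once per second for a year. Each observation sees at most one joint state.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # one sample per second for a year


def state_space_size(components: int, states_per_component: int) -> int:
    """Joint states if each component can independently be in any of its states."""
    return states_per_component ** components


def max_coverage(samples: int, components: int, states_per_component: int) -> float:
    """Upper bound on the fraction of joint states a sample set can contain,
    since each sample observes at most one joint state."""
    return min(1.0, samples / state_space_size(components, states_per_component))


if __name__ == "__main__":
    # Hypothetical system: 20 components with 5 states each.
    print(state_space_size(20, 5))
    print(max_coverage(SECONDS_PER_YEAR, 20, 5))
```

Under these assumptions, a full year of per-second samples covers at most about 3.3e-7 of the roughly 9.5e13 joint states. Real systems also have continuous quantities and correlated states, so the true picture differs, but the gap stays enormous.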

Dynamic complexity—over any reasonable period of time—always produces a negative effect (loss, time elongation, or shortage), causing inefficiencies and side effects, similar to friction, collision or drag. Dynamic complexity cannot be observed directly, only its effects can be measured.

Static vs. Dynamic Complexity

To understand the difference between complexity (a.k.a. static complexity) and dynamic complexity, it helps to think of static complexity as something that can be counted (a number of something), while dynamic complexity is something that is produced (often at a moment we do not expect). Dynamic complexity is formed through interactions, interdependencies, feedback, locks, conflicts, contentions, prioritizations, enforcements, etc. It is subsequently revealed through the formation of congestion, inflation, degradation, latency, overhead, chaos, singularities, strange behavior, etc.

Human thinking is usually based on linear models, direct impacts, static references, and 2-dimensional movements. This reflects the vast majority of our universe of experiences. Exponential, non-linear, dynamic, multi-dimensional, and open systems are challenges to our human understanding. This is one of the natural reasons we can be tempted to cope with simple models rather than open systems and dynamic complexity. But simplifying a dynamic system into a closed loop model doesn’t make our problems go away.

Dynamic Complexity Creates Risk

With increasing frequency, businesses, governments and economies are surprised by a sudden manifestation of a previously unknown risk (the Fukushima Daiichi Nuclear Disaster, the 2007-2008 Financial Crisis, or Obamacare’s Website Launch Failure are a few recent examples). In most cases the unknown risks are caused by dynamic complexity, which lies hidden like a cancer until the symptoms reveal themselves.

Often knowledge of the risk comes too late to avoid negative impacts on business outcomes and forces businesses to reactively manage the risk. As the pace of business accelerates and decision windows shrink, popular methods of prediction and risk management are becoming increasingly inadequate. Real-time prediction based on historical reference models is no longer enough. To achieve better results, businesses must be able to expose new, dangerous patterns of behavior with sufficient time to take corrective actions—and be able to determine with confidence which actions will yield the best results.
